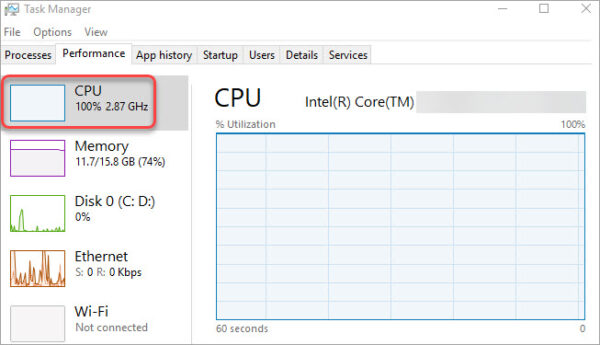

To summarize, use DataTables only when you know that the amount of entries won't exceed some hundreds of thousands. and shortly the catastrophic OutOfMemoryException error. To see the difference, do the same using DataTables, then you will see spikes instead of one steady line. You will notice that while it's running, there will be no spikes but one steady line, which proves that the code will cause no memory leaks. The moment you start bulk importing, it's worth monitoring the memory from the Task Manager. Task.Run( async () => await bulkloader.BulkLoadTableAsync(streamReader, " RandomNumbers")).Wait() Var file = ConfigurationManager.AppSettings The client will only need to do the following:Ĭopy Code public static void Main(string args) Public new int Depth Įof = String.IsNullOrEmpty(CurrentRecord) When working with big data, you will need to update this property:Ĭopy Code public abstract class ObjectDataReader : IDataReader With this way, we can use that refined class, without importing all the methods we don't need.Īdditionally, there are two things to consider: SqlBulkCopy.BulkCopyTimeout Property A good solution is to isolate only those methods we need, by hiding the methods we don't need, with the new operator. If you attempt to use the base class that inherits from IDataReader, the code will be massive. You will notice that this interface includes many methods. The first step is to create a class that inherits from IDataReader. To make it work with big data, we will need to use the second one, WriteToServerAsync(IDataReader). Likely, the WriteToServerAsync exhibits a polymorphic behavior. The asynchronous version of WriteToServer, which copies all rows in the supplied DataTable to a destination table specified by the DestinationTableName property of the SqlBulkCopy object.Ĭopies all rows in the supplied IDataReader to a destination table specified by the DestinationTableName property of the SqlBulkCopy object. If we use them to load massive data, we will experience an OutOfMemoryException error, which clearly proves that the common language runtime cannot allocate enough contiguous memory to successfully perform an operation. Bring up the Task Manager and monitor the Memory, while it's running.ĭatatables are easy to use, however, they are also one of the best candidates for Memory Leaks.Modify the ConnectionString accordingly in the SqlBulkLoader project, as it needs to point to your local database.

Execute this so we can generate the file. Set up as StartUp project the FileGenerator. The application is constituted by two console applications.We need the database numbers with the table RandomNumbers. bak file that you need to import to your local database. However, one will need to take a different approach if we are dealing with big data files, i.e. You can use DataTables if you have some hundreds of thousands rows. We use SqlBulkCopy to transfer data to the SQL Server.

0 kommentar(er)

0 kommentar(er)